Google and OpenAI's compute race drives exponential growth in DRAM/NAND demand as Micron (MU.US) reaps an “AI infrastructure super dividend”

According to the Zhitong Finance App, shares of US memory chip giant Micron Technology (MU.US) have risen about 180% so far this year, driven by the “storage supercycle,” an exceptionally strong bull-market thesis. In early December, Micron even announced that it would stop selling storage products to individual consumers in the PC/DIY market so that it can devote its production capacity to the large AI data centers now being built at scale. The move underscores how, with the global AI infrastructure boom in full swing, demand for high-performance data-center-grade DRAM and NAND products continues to surge.

Wells Fargo, a well-known Wall Street investment firm, said in a recent research report that both Google's huge TPU AI compute clusters and massive Nvidia AI GPU clusters depend on HBM memory that must be tightly integrated with the AI chips, and that tech giants currently also have to buy server-grade DDR5 memory and enterprise-grade high-performance SSDs/HDDs at scale to build or expand AI data centers. Micron is present in all three core areas at once: HBM, server DRAM (including DDR5/LPDDR5X), and high-end data center SSDs. That makes it one of the most direct beneficiaries of the “AI memory + storage stack,” effectively collecting a “super dividend” from AI infrastructure.

The world's two largest memory makers, Samsung Electronics and SK Hynix, as well as storage heavyweights such as Western Digital and Seagate, have recently reported strong results, prompting Wall Street banks such as Morgan Stanley to trumpet a “storage supercycle.” The results highlight two forces: the continued explosion in global demand for AI training/inference compute, and a consumer electronics recovery cycle driven by the on-device AI boom. Together they are driving exponential growth in demand for DRAM and NAND products, especially DRAM, which accounts for the largest share of Micron's storage business, and within DRAM particularly HBM and high-performance server-grade DDR5. In addition, demand for enterprise-grade SSDs on the NAND side has also surged recently.

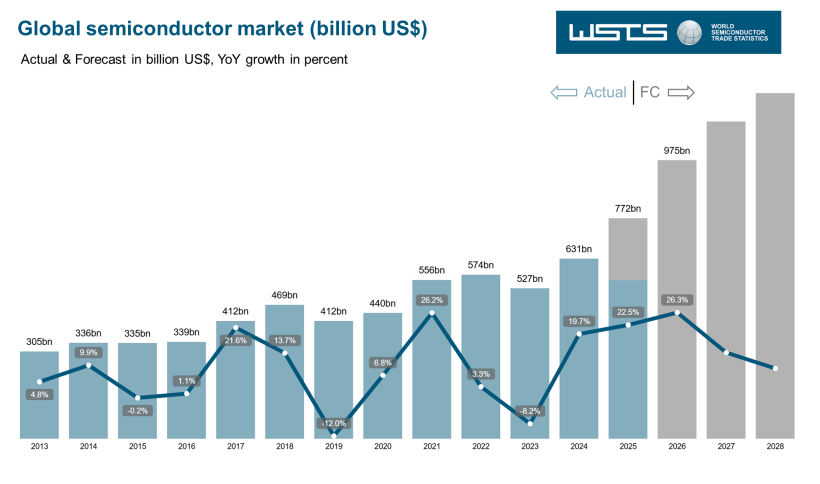

According to the latest semiconductor industry outlook recently released by World Semiconductor Trade Statistics (WSTS), global chip demand is expected to keep expanding strongly in 2026, and MCUs and analog chips, which have been weak since the end of 2022, are also expected to enter a strong recovery.

WSTS expects that, after a strong rebound in 2024, the global semiconductor market will grow 22.5% in 2025 to a total of US$772.2 billion, higher than the forecast WSTS gave in the spring. Building on that strong 2025 growth, the market is expected to expand significantly in 2026 to US$975.5 billion, an increase of about 26% year over year, bringing it close to the US$1 trillion market size that SEMI has projected for 2030.
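A quick worked check of the WSTS arithmetic, as a minimal Python sketch: it reads the 2026 forecast as US$975.5 billion (the value consistent with the stated growth of about 26%) and back-solves the implied 2024 base from the 2025 figure and its 22.5% growth rate. The variable names are illustrative, and the outputs are only arithmetic on the quoted forecasts, not additional WSTS data.

```python
# Worked check of the WSTS market-size figures (values in billions of US dollars).
market_2025 = 772.2   # WSTS forecast for 2025
growth_2025 = 0.225   # stated 2025 growth rate (22.5%)
market_2026 = 975.5   # WSTS forecast for 2026, read as 975.5 billion

# Implied 2024 base: divide the 2025 value by (1 + 2025 growth rate).
implied_2024 = market_2025 / (1 + growth_2025)

# Implied 2026 growth rate relative to the 2025 forecast.
growth_2026 = market_2026 / market_2025 - 1

print(f"Implied 2024 market: {implied_2024:.1f} B USD")
print(f"Implied 2026 growth: {growth_2026:.1%}")
```

Running it gives an implied 2024 market of roughly US$630 billion and 2026 growth of about 26.3%, matching the rounded 26% in the forecast.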

According to Wall Street firms including Morgan Stanley, Citi, Loop Capital, and Wedbush, the global wave of AI infrastructure investment centered on AI compute hardware is far from over and is only just beginning. Driven by an unprecedented “storm” of demand for AI inference compute, this round of AI infrastructure investment, expected to run through 2030, could reach US$3 trillion to US$4 trillion.

The DRAM market will surge in 2026, and Micron is one of the biggest beneficiaries

Wells Fargo said in its latest research report that, with total DRAM industry sales (including HBM) expected to more than double year over year in 2026, Micron, the US-based memory giant and the world's third-largest memory chip maker, whose business is heavily weighted toward DRAM, will be one of the biggest beneficiaries. Wells Fargo reiterated its “Overweight” rating and its $300 price target on Micron. As of Tuesday's US market close, Micron's shares stood at $232.51.

“Market research firm TrendForce has raised its forecasts for total DRAM industry revenue for calendar years CY25 and CY26, which now stand at about US$165.7 billion (+73% YoY) and US$333.5 billion (+101% YoY), respectively,” Aaron Rakers, a senior analyst at Wells Fargo, wrote in a note to clients.

“This is a significant upgrade from TrendForce's previous CY25 and CY26 forecasts (November 2025), which were US$162.6 billion (+70% YoY) and US$306 billion (+85% YoY), respectively. We therefore reaffirm our bullish stance on Micron and are optimistic about the results Micron will report after the market close on December 17 (EST).”
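The size of TrendForce's revision can be read directly off the quoted figures. The minimal Python sketch below, with illustrative variable names, computes how much each forecast was raised, the CY26-over-CY25 growth implied by the latest numbers, and the CY24 base implied by the stated +73% for CY25. It uses only the numbers in Rakers' quote.

```python
# TrendForce DRAM industry revenue forecasts quoted by Wells Fargo (billions of USD).
previous = {"CY25": 162.6, "CY26": 306.0}  # November 2025 forecast
current = {"CY25": 165.7, "CY26": 333.5}   # latest forecast

# Absolute and relative size of the upward revision for each year.
for year in ("CY25", "CY26"):
    delta = current[year] - previous[year]
    pct = delta / previous[year]
    print(f"{year}: +{delta:.1f} B USD ({pct:.1%} above the earlier forecast)")

# Year-over-year growth implied by the latest CY26 vs CY25 figures.
cy26_growth = current["CY26"] / current["CY25"] - 1
print(f"CY26 vs CY25 (latest forecast): {cy26_growth:.0%}")

# CY24 base implied by the stated +73% growth for CY25.
implied_cy24 = current["CY25"] / 1.73
print(f"Implied CY24 DRAM revenue: {implied_cy24:.1f} B USD")
```

The CY26 forecast rises by about US$27.5 billion (roughly 9%), while CY25 moves only about US$3.1 billion, so most of the upgrade falls on 2026.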

Rakers added that TrendForce is positive about Micron's process upgrades over the next year. Micron's more advanced 1-gamma (1γ) DRAM process node is expected to ramp to 38% of its total DRAM bit output by the end of 2026, up from only 12% in 2025. By comparison, Rakers noted, the Wells Fargo research team projects that by the end of 2026, high-end 1-gamma-equivalent DRAM processes will account for 11% and 25% at Samsung Electronics and SK Hynix, the world's two largest memory chip makers, respectively.

Micron: neither the “Google AI Chain” nor the “OpenAI Chain” can do without it

With ChatGPT developer OpenAI launching GPT-5.2, which is “ridiculously strong” at deep reasoning and code generation, to compete with Gemini 3, which Google released in late November, the “pinnacle AI duel” between Google and OpenAI has reached its climax.

From an investment perspective, OpenAI and Google represent the two hottest investment lines in global equity markets right now: the “OpenAI Chain” and the “Google AI Chain.” According to Wall Street, three “super investment themes” stand to be the biggest beneficiaries of a Google-OpenAI rivalry that may continue for many years. Whether the two remain evenly matched or one pulls decisively ahead, all three themes benefit over the long term: high-speed data center interconnect (DCI), optical connectivity, and enterprise-grade high-performance storage.

Breaking down the “OpenAI Chain” and the “Google AI Chain,” the former corresponds broadly to the “Nvidia AI compute chain” (built around AI GPUs), while the latter corresponds to the “TPU AI compute industry chain” (built around AI ASICs). Beyond the GPU and ASIC compute routes themselves, both the “InfiniBand + Spectrum-X/Ethernet” high-performance networking dominated by Nvidia and the OCS (Optical Circuit Switching) high-performance networking led by Google ultimately rely on the enterprise-grade high-performance data center storage systems supplied by the leading storage vendors.

Echoing Wells Fargo, Mizuho, another major Wall Street financial firm, also believes Micron will be one of the biggest beneficiaries of the accelerating expansion of Google's TPU AI compute clusters and the continued large-scale growth in demand for Nvidia's Blackwell/Rubin AI GPUs. Judging from the nearly $1.4 trillion in cumulative AI compute infrastructure agreements OpenAI has signed and the progress of its “Stargate” AI infrastructure project, these mega AI infrastructure projects all urgently need enterprise-grade high-performance data center storage at scale (centered on HBM, enterprise-grade SSDs/HDDs, and server-grade DDR5). This growth is pushing up demand and selling prices for almost all storage products and sending the share prices of the major storage companies soaring.

In the large-scale AI data centers being built by OpenAI, Google, Microsoft, and Meta, server-grade DDR5 is, alongside HBM (built from 3D-stacked DRAM), a must-have core memory resource that has to be purchased at scale; the two complement rather than replace each other. The DRAM capacity of AI server clusters supporting massive AI training/inference workloads is typically 8 to 10 times that of traditional CPU servers, and many individual machines already exceed 1TB of DDR5. The industry is clearly migrating to DDR5, mainly because DDR5 offers roughly 50% more bandwidth than DDR4, making it better suited to heavyweight AI workloads.
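The “about 50%” bandwidth figure follows from peak data rates. As a minimal Python sketch, assuming DDR4-3200 and DDR5-4800 as the comparison points (speed grades the article does not specify), peak per-DIMM bandwidth is the transfer rate multiplied by the 8 bytes of data moved per transfer, with ECC bits excluded. The helper name `peak_bandwidth_gbs` is hypothetical.

```python
# Peak theoretical bandwidth of one DIMM = transfers per second x bytes per transfer.
# A DDR4 DIMM has a 64-bit data bus; a DDR5 DIMM splits 64 data bits into two
# 32-bit subchannels, so both move 8 bytes of data per transfer (ECC excluded).
BYTES_PER_TRANSFER = 8


def peak_bandwidth_gbs(mega_transfers_per_s: int) -> float:
    """Hypothetical helper: peak bandwidth in GB/s (decimal) for a data rate in MT/s."""
    return mega_transfers_per_s * BYTES_PER_TRANSFER / 1000


ddr4 = peak_bandwidth_gbs(3200)  # DDR4-3200 (assumed comparison point)
ddr5 = peak_bandwidth_gbs(4800)  # DDR5-4800 (assumed comparison point)
print(f"DDR4-3200: {ddr4:.1f} GB/s, DDR5-4800: {ddr5:.1f} GB/s, gain: {ddr5 / ddr4 - 1:.0%}")
```

This yields 25.6 GB/s versus 38.4 GB/s, a 50% gain; faster DDR5 grades such as DDR5-6400 widen the gap further, so the 50% figure corresponds to an entry-level DDR5 speed.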
