Sup AI Sets New Benchmark Record with 52.15% on Humanity's Last Exam

Surpasses every individual frontier model on the world's hardest open-source AI reasoning test

Important Disclosure: This is an independent evaluation conducted by Sup AI and is not officially endorsed, validated, or recognized by the Center for AI Safety, Scale AI, or the HLE benchmark creators. Sup AI is not affiliated with CAIS or Scale AI.

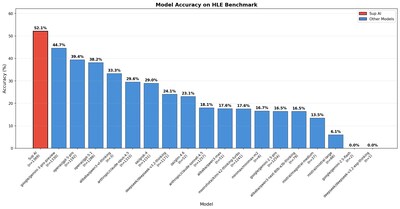

PALO ALTO, Calif., Dec. 10, 2025 /PRNewswire/ -- Sup AI announced today that its multi-model orchestration system has achieved 52.15% accuracy on Humanity's Last Exam (HLE), the most challenging publicly available benchmark for advanced AI reasoning. This performance establishes Sup AI as the new state-of-the-art (SOTA), outperforming all individual frontier models including Google's Gemini 3 Pro Preview, OpenAI's GPT-5 Pro and GPT-5.1, Anthropic's Claude Opus 4.5, and xAI's Grok-4.

HLE is designed to resist saturation as AI capabilities improve, blending advanced mathematics, scientific reasoning, and logic into 2,500 expert-crafted questions. Crossing 50% accuracy on this benchmark marks a significant milestone in the progression of general AI reasoning capabilities.

Note: All evaluated models, including Sup AI, were run with enhanced evaluation settings such as custom instructions, web search, and low-confidence retries. These settings raise every model's score relative to its officially published benchmark results, but the relative rankings remain stable and Sup AI maintains a clear lead.

Sup AI's Results at a Glance

| Metric | Value |
|---|---|
| Accuracy | 52.15% |
| Questions Evaluated | 1,369 |
| Lead Over Next Best Model | +7.49 points |
| ECE (stated-confidence calibration) | 35.22% |

Sup AI's score is statistically significant at p < 0.001, with a 95% confidence interval of ±2.65 percentage points.
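The stated interval can be checked with a short calculation. The sketch below assumes a normal-approximation (Wald) interval for a binomial proportion at 95% confidence (z = 1.96). The release does not name its estimator, but this assumption reproduces the ±2.65-point figure from the reported accuracy and sample size. The function name is illustrative only.

```python
import math


def wald_interval_half_width(p: float, n: int, z: float = 1.96) -> float:
    """Half-width of a normal-approximation (Wald) confidence interval for a proportion."""
    return z * math.sqrt(p * (1.0 - p) / n)


accuracy = 0.5215   # reported accuracy
n_questions = 1369  # reported number of evaluated questions

half_width = wald_interval_half_width(accuracy, n_questions)
print(f"±{half_width * 100:.2f} percentage points")  # ±2.65 percentage points
```

Under this assumption the interval runs from roughly 49.50% to 54.80%.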

A Clear Lead Over Frontier Models

Sup AI's ensemble system outperformed every major standalone model evaluated:

| Model | Accuracy |
|---|---|
| Sup AI | 52.15% |
| Gemini 3 Pro Preview | 44.66% |
| GPT-5 Pro | 39.43% |
| GPT-5.1 | 38.18% |
| Claude Opus 4.5 | 29.56% |
| DeepSeek v3.2 Thinking | 24.08% |

The 7.49-point lead over the strongest standalone model, Gemini 3 Pro Preview, underscores a core principle: a well-engineered orchestrated ensemble can substantially outperform even its strongest component model.
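The lead figures follow directly from the reported accuracies. The short Python sketch below recomputes Sup AI's margin over each standalone model using only the numbers listed in the comparison.

```python
# Accuracies (%) as reported in this release
scores = {
    "Sup AI": 52.15,
    "Gemini 3 Pro Preview": 44.66,
    "GPT-5 Pro": 39.43,
    "GPT-5.1": 38.18,
    "Claude Opus 4.5": 29.56,
    "DeepSeek v3.2 Thinking": 24.08,
}

ensemble = scores["Sup AI"]
# Round to two decimals to strip floating-point noise from the subtraction
margins = {
    name: round(ensemble - acc, 2) for name, acc in scores.items() if name != "Sup AI"
}

for name, margin in margins.items():
    print(f"{name}: +{margin:.2f} points")
```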

Why Sup AI Wins: Ensemble Intelligence

Sup AI dynamically routes each question to a set of frontier models most suited to the problem, analyzes probability distributions across their outputs, and synthesizes an answer weighted by confidence, specialization, and inter-model agreement. If confidence is insufficient or models disagree meaningfully, Sup AI automatically retries.

Sup AI also enables multimodal handling even for models that lack native support, pre-processing images or PDFs when required.

The result is not a simple vote but a structured, confidence-weighted synthesis that outperformed every individual model in this evaluation.
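To illustrate how confidence weighting can differ from a simple vote, here is a minimal sketch. It is not Sup AI's implementation. The `ModelAnswer` type, the per-model `weight` standing in for specialization, and the `min_share` retry threshold are all hypothetical. The sketch also assumes answers have already been normalized so that equivalent answers compare equal.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass


@dataclass
class ModelAnswer:
    model: str           # hypothetical model identifier
    answer: str          # normalized final answer
    confidence: float    # self-reported confidence in [0, 1]
    weight: float = 1.0  # hypothetical specialization weight for this question's domain


def majority_vote(answers: list[ModelAnswer]) -> str:
    """Plain vote: each model counts once, regardless of confidence."""
    counts = Counter(a.answer for a in answers)
    return counts.most_common(1)[0][0]


def weighted_synthesis(
    answers: list[ModelAnswer], min_share: float = 0.5
) -> tuple[str, float, bool]:
    """Aggregate answers by confidence x specialization weight.

    Returns (answer, share, should_retry), where share is the fraction of the
    total weight behind the winning answer. A low share (meaningful
    disagreement) is treated as a signal to retry.
    """
    scores: dict[str, float] = defaultdict(float)
    for a in answers:
        scores[a.answer] += a.confidence * a.weight
    total = sum(scores.values())
    best = max(scores, key=scores.get)
    share = scores[best] / total if total > 0 else 0.0
    return best, share, share < min_share


# Example: two low-confidence models agree; one confident specialist disagrees
answers = [
    ModelAnswer("model-a", "42", confidence=0.30),
    ModelAnswer("model-b", "42", confidence=0.35),
    ModelAnswer("model-c", "17", confidence=0.95, weight=1.5),
]
print(majority_vote(answers))  # 42
best, share, retry = weighted_synthesis(answers)
print(best, round(share, 2), retry)  # 17 0.69 False
```

In this example the plain vote follows the two-model majority, while the weighted synthesis follows the confident, more heavily weighted model. A low winning share is used as a simple stand-in for "models disagree meaningfully," which triggers a retry.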

About Humanity's Last Exam

Humanity's Last Exam (HLE) is a high-difficulty benchmark developed by independent researchers to evaluate deep reasoning, mathematical problem-solving, scientific understanding, and multi-step logic. With 2,500 public questions and no "trivial" shortcuts, HLE remains one of the few benchmarks on which frontier models have not yet approached human-expert-level performance.

Sup AI evaluated 1,369 randomly selected questions using the standard Sup AI chat settings, identical to those available to any user of the platform.

Leadership Perspective

"Crossing 50% on HLE isn't about luck. It's about architecture," said Ken Mueller, CEO of Sup AI. "No single model dominates every domain, but an orchestrated system that understands when to trust, when to weight, and when to retry can. Sup AI shows that careful ensemble engineering can push beyond the ceiling of any standalone model."

Evaluation Methodology

- Each question (text and image) was submitted to the Sup AI API using the platform's normal system prompt.

- Each response was structured into an explanation, a final answer, and a self-reported confidence score.

- GPT-5.1 served as the automated judge, evaluating answer correctness via strict extraction, semantic equivalence, and numerical-tolerance matching.

- Accuracy and calibration metrics were computed using established statistical estimators.
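The judge in this evaluation is an LLM (GPT-5.1), so semantic equivalence cannot be reproduced with simple rules. The sketch below covers only the deterministic parts: strict matching after normalization, numeric matching within a tolerance, and the accuracy and calibration metrics computed from the graded results. The function names, the comma stripping for numbers, the 1% relative tolerance, and the 10 equal-width confidence bins are all assumptions. The published evaluation code may use a different estimator, for example a root-mean-square calibration error over fixed-size bins.

```python
import math
import re


def normalize(text: str) -> str:
    """Lowercase, trim, and collapse whitespace for a strict string comparison."""
    return re.sub(r"\s+", " ", text.strip().lower())


def try_float(text: str):
    """Parse a number, ignoring thousands separators (assumption); None if not numeric."""
    try:
        return float(text.replace(",", ""))
    except ValueError:
        return None


def is_correct(predicted: str, reference: str, rel_tol: float = 1e-2) -> bool:
    """Strict match after normalization, else numeric match within a relative tolerance."""
    if normalize(predicted) == normalize(reference):
        return True
    p, r = try_float(predicted), try_float(reference)
    if p is not None and r is not None:
        return math.isclose(p, r, rel_tol=rel_tol)
    return False


def expected_calibration_error(confidences, correct, n_bins: int = 10) -> float:
    """Equal-width-bin ECE: size-weighted mean of |bin accuracy - bin confidence|."""
    n = len(confidences)
    bins = [[] for _ in range(n_bins)]
    for c, ok in zip(confidences, correct):
        idx = min(int(c * n_bins), n_bins - 1)  # confidence 1.0 falls in the last bin
        bins[idx].append((c, ok))
    ece = 0.0
    for b in bins:
        if not b:
            continue
        acc = sum(ok for _, ok in b) / len(b)
        conf = sum(c for c, _ in b) / len(b)
        ece += len(b) / n * abs(acc - conf)
    return ece


# Example: (prediction, reference) pairs with self-reported confidences
graded = [("3.14159", "3.1416"), ("Paris", "paris"), ("12", "13"), ("1,000", "1000")]
correct = [is_correct(pred, ref) for pred, ref in graded]
confidences = [0.90, 0.80, 0.70, 0.95]

print(correct)                                                   # [True, True, False, True]
print(sum(correct) / len(correct))                               # 0.75
print(round(expected_calibration_error(confidences, correct), 4))  # 0.2625
```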

Sup AI's complete evaluation code, predictions, judged outputs, metrics, and per-question results are publicly available for full reproducibility.

Significance

Sup AI's performance demonstrates:

- It is possible to meaningfully surpass top individual models with an ensemble system.

- Specialization matters: different models dominate different domains, and orchestration captures these strengths.

- Benchmarks like HLE remain valuable, showing substantial headroom even as AI surpasses 50% accuracy.

- These capabilities are available today through Sup AI's production API and chat interface.

Availability

The full evaluation, code, and results can be accessed at:

GitHub Repository: https://github.com/supaihq/hle

Sup AI Platform:

HLE Benchmark:

Sup AI invites researchers, engineers, and enterprise teams to independently reproduce the results and explore the platform's orchestration capabilities.

Citation

@misc{supai-hle-2025,
  title={Sup AI Achieves 52.15\% on Humanity's Last Exam},
  author={{Sup AI}},
  year={2025},
  url={https://github.com/supaihq/hle}
}

For press inquiries or partnership discussions, please contact: support@sup.ai

View original content to download multimedia: https://www.prnewswire.com/news-releases/sup-ai-sets-new-benchmark-record-with-52-15-on-humanitys-last-exam-302637675.html

SOURCE Sup AI
